| Time | Speaker | Affiliation | Title |
| --- | --- | --- | --- |
| 13h30 | Christian TAMBURINI | CNRS MIO | Presentation of the new CNRS Research Group (GdR) Ocean and Seas (Omer), for the understanding, conservation and safeguarding of the ocean |
| 13h50 | Vincent CREUZE | CNRS LIRMM | Presentation of the water-sea-ocean axis of the LIRMM (Univ. Montpellier / CNRS) |
| 14h10 | Nicolas LALANNE | ECA | Multiple approaches for increasing the navigational robustness of an ROV |
| 14h30 | Minh Duc HUA | CNRS I3S, OSCAR team | Dynamic visual servoing of underwater vehicles |
| 14h50 | Nicolas CADART | Notilo plus | Developments of the Seasam |
| 15h00 | Coffee break | | |
| 15h40 | Michel THIL | INPP | Update on French regulations for professional diving, and on training at the INPP |
| 16h00 | Loica AVANTHEY / Laurent BEAUDOIN | SEAL team, EPITA, Paris | Survey of the surf zone with light means: an overview of the work of the SEAL team |
| 16h20 | Jean Marc TEMMOS | Semantic TS | Presentation of the Merritoire project (merritoire.com), a catalyst for projects based on 3D seabed modeling |
| 16h20 | Edin OMERDIC | University of Limerick | Innovative Engineering based on Visualisation and Geometric Observations |
| 16h40 | Maxime FERRERA | IFREMER | Vision-based 3D reconstruction at Ifremer |
| 17h00 | Claire DUNE | COSMER | Closing of Submeeting 2022 |

Multiple approaches for increasing the navigational robustness of an ROV

Nicolas Lalanne, Research Engineer, ECA Robotics

We present methods from the literature for integrating automatic operating modes into ROVs (remotely operated vehicles), so that they remain robust to communication problems and provide information that humans can readily exploit in complex natural environments.

ROVs can be equipped with visible-light cameras and forward-looking sonars (FLS) to perceive the local environment in front of the robot. The vehicle's position can be measured with an ultra-short baseline (USBL) acoustic positioning system.

To locate an ROV in space, a pressure sensor measures the vehicle's depth (immersion) and a depth sounder measures its altitude above the seafloor, while proprioception can be provided by an inertial measurement unit (IMU), which measures the accelerations and rotation rates of the vehicle.
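As a rough illustration of how these two sensors combine, the sketch below converts absolute pressure to depth with the hydrostatic relation $d = (p - p_{atm}) / (\rho g)$ and adds the sounder altitude to obtain the water depth at the vehicle's position. It assumes a constant seawater density of 1025 kg/m³; real systems typically use a standard seawater conversion that accounts for temperature, salinity and latitude. The function names are hypothetical.

```python
# Hypothetical constants: nominal seawater density is an assumption;
# actual density varies with temperature, salinity and pressure.
RHO_SEAWATER = 1025.0  # kg/m^3
G = 9.80665            # m/s^2, standard gravity
P_ATM = 101325.0       # Pa, standard atmospheric pressure


def depth_from_pressure(p_abs_pa, rho=RHO_SEAWATER, p_atm=P_ATM):
    """Hydrostatic depth estimate (m) from absolute pressure (Pa)."""
    return (p_abs_pa - p_atm) / (rho * G)


def seafloor_depth(depth_m, altitude_m):
    """Water depth below the surface at the vehicle: vehicle depth + sounder altitude."""
    return depth_m + altitude_m


# Usage: pressure corresponding to 50 m of water, altitude of 12.5 m above the seafloor.
d = depth_from_pressure(P_ATM + RHO_SEAWATER * G * 50.0)
print(round(d, 6))                        # 50.0
print(round(seafloor_depth(d, 12.5), 6))  # 62.5
```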

Guidance involves establishing a trajectory computed over time. We present a way to compute this trajectory from odometry measurements produced by the FLS, which are used as updates to an unscented Kalman filter (UKF) whose navigation predictions are computed from the IMU measurements.
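The predict/update structure described above can be sketched with a minimal UKF. This is not ECA's implementation: it assumes a hypothetical 1D along-track state [position, velocity], an IMU acceleration driving the prediction, and FLS odometry reduced to a velocity measurement (displacement between two sonar frames divided by the time step). The sigma points follow the classic Julier formulation with a single parameter `kappa`.

```python
import math

# Minimal UKF sketch (pure Python). Hypothetical model:
#   state x = [position, velocity] along track,
#   prediction driven by an IMU acceleration,
#   update from an FLS-odometry velocity measurement.


def cholesky(a):
    """Lower-triangular L with L L^T == a (a symmetric positive definite)."""
    n = len(a)
    low = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = sum(low[i][k] * low[j][k] for k in range(j))
            low[i][j] = math.sqrt(a[i][i] - s) if i == j else (a[i][j] - s) / low[j][j]
    return low


def sigma_points(x, p, kappa):
    """Sigma points x and x +/- columns of sqrt((n + kappa) P), with their weights."""
    n = len(x)
    low = cholesky([[(n + kappa) * p[i][j] for j in range(n)] for i in range(n)])
    pts = [list(x)]
    for j in range(n):
        pts.append([x[i] + low[i][j] for i in range(n)])
        pts.append([x[i] - low[i][j] for i in range(n)])
    weights = [kappa / (n + kappa)] + [1.0 / (2 * (n + kappa))] * (2 * n)
    return pts, weights


def mean_cov(pts, w, noise):
    """Weighted mean and covariance of transformed sigma points plus additive noise."""
    n = len(pts[0])
    m = [sum(wk * pk[i] for wk, pk in zip(w, pts)) for i in range(n)]
    c = [[sum(wk * (pk[i] - m[i]) * (pk[j] - m[j]) for wk, pk in zip(w, pts)) + noise[i][j]
          for j in range(n)] for i in range(n)]
    return m, c


def ukf_predict(x, p, accel, dt, q, kappa=1.0):
    """Propagate sigma points through constant-acceleration kinematics (IMU input)."""
    pts, w = sigma_points(x, p, kappa)
    moved = [[s[0] + s[1] * dt + 0.5 * accel * dt * dt, s[1] + accel * dt] for s in pts]
    return mean_cov(moved, w, q)


def ukf_update(x, p, z, r, kappa=1.0):
    """Correct with a scalar odometry velocity measurement z = h(x) = x[1]."""
    pts, w = sigma_points(x, p, kappa)
    zs = [s[1] for s in pts]
    zm = sum(wk * zk for wk, zk in zip(w, zs))
    s_var = sum(wk * (zk - zm) ** 2 for wk, zk in zip(w, zs)) + r
    n = len(x)
    pxz = [sum(w[k] * (pts[k][i] - x[i]) * (zs[k] - zm) for k in range(len(pts)))
           for i in range(n)]
    gain = [pxz[i] / s_var for i in range(n)]
    x_new = [x[i] + gain[i] * (z - zm) for i in range(n)]
    p_new = [[p[i][j] - gain[i] * s_var * gain[j] for j in range(n)] for i in range(n)]
    return x_new, p_new


# Usage: vehicle moving at 1 m/s, no acceleration, odometry reporting 1 m/s at 10 Hz.
x, p = [0.0, 0.0], [[1.0, 0.0], [0.0, 1.0]]
q = [[1e-4, 0.0], [0.0, 1e-4]]
for _ in range(20):
    x, p = ukf_predict(x, p, accel=0.0, dt=0.1, q=q)
    x, p = ukf_update(x, p, z=1.0, r=0.01)
print(x)  # velocity estimate close to 1.0, position close to 2 m
```

With these linear toy models the UKF reproduces the ordinary Kalman filter; its benefit appears when the process or measurement model is nonlinear, as with full 6-DoF kinematics.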

FLS odometry uses Fourier correlation between two sonar images to register them, followed by a global trajectory optimization algorithm. In parallel with odometry, a real-time morphological analysis algorithm can be applied to the FLS images to detect landmarks and objects of interest. The navigation and detection results obtained with these methods are presented using simulated data from a simulator coupled to the Unity game engine.
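To make the Fourier-correlation step concrete, here is a textbook phase-correlation sketch that recovers the integer translation between two images. It is a simplification under stated assumptions: translation only, circular shifts, and a naive DFT for self-containment (a real pipeline would use an FFT library and also handle rotation and the fan-shaped FLS geometry). The function names are hypothetical and this is not the ECA implementation.

```python
import cmath
import random


def dft2(img, inverse=False):
    """Naive 2D discrete Fourier transform, O(N^4); only for tiny illustrative images."""
    rows, cols = len(img), len(img[0])
    sign = 1.0 if inverse else -1.0
    out = [[0j] * cols for _ in range(rows)]
    for u in range(rows):
        for v in range(cols):
            acc = 0j
            for y in range(rows):
                for x in range(cols):
                    acc += img[y][x] * cmath.exp(sign * 2j * cmath.pi * (u * y / rows + v * x / cols))
            out[u][v] = acc / (rows * cols) if inverse else acc
    return out


def phase_correlation(a, b, eps=1e-12):
    """Estimate the circular shift (dy, dx) such that b[y][x] ~= a[y - dy][x - dx]."""
    rows, cols = len(a), len(a[0])
    fa, fb = dft2(a), dft2(b)
    # Normalized cross-power spectrum: keep only the phase difference.
    cross = [[0j] * cols for _ in range(rows)]
    for u in range(rows):
        for v in range(cols):
            c = fb[u][v] * fa[u][v].conjugate()
            cross[u][v] = c / (abs(c) + eps)
    corr = dft2(cross, inverse=True)
    # Ideally a delta function located at the shift: take the peak of the real part.
    _, dy, dx = max((corr[y][x].real, y, x) for y in range(rows) for x in range(cols))
    # Map indices in [0, N) to signed shifts.
    if dy > rows // 2:
        dy -= rows
    if dx > cols // 2:
        dx -= cols
    return dy, dx


# Usage: a random "sonar patch" and a copy shifted by (3, -5) pixels.
rng = random.Random(0)
N = 16
a = [[rng.random() for _ in range(N)] for _ in range(N)]
b = [[a[(y - 3) % N][(x + 5) % N] for x in range(N)] for y in range(N)]
print(phase_correlation(a, b))  # (3, -5)
```

Normalizing the cross-power spectrum discards amplitude, which is what makes phase correlation comparatively tolerant of intensity changes between successive sonar images.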

Dynamic visual servoing of underwater vehicles

Minh Duc HUA, CNRS Research Scientist (CR), OSCAR team, CNRS I3S, Sophia Antipolis

Inspired by inspection and surveillance applications of underwater drones, the OSCAR team of the I3S laboratory has built its underwater activities around the theme of "dynamic visual servoing" of fully actuated or underactuated underwater robots. We present recent results on the vision-based "Dynamic Positioning" and "Pipeline or Cable Detection and Tracking" problems, using a minimal set of low-cost sensors.

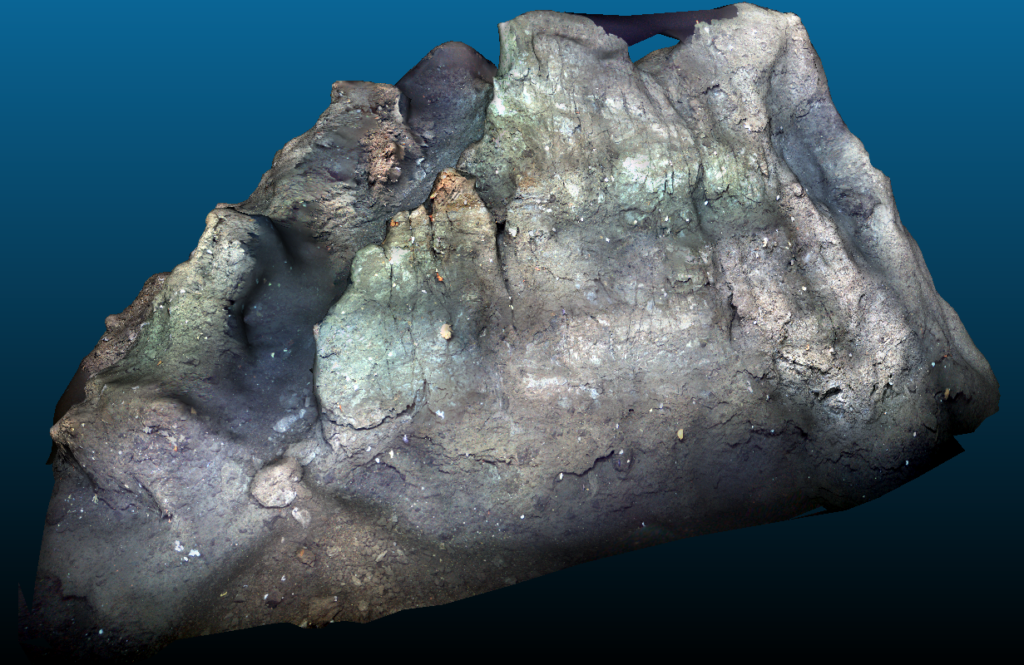

Vision-based 3D reconstruction at Ifremer

Maxime Ferrera, Research Engineer, PRAO team, IFREMER, La Seyne-sur-Mer

In recent years, advances in computer vision have led to the development of high-resolution 3D reconstruction algorithms that work from image collections.

In the underwater context, these methods have been warmly received by the scientific community because they make it possible to visualize global 3D models of areas of interest which, given the inaccessibility and hostility of this environment, could until now only be observed very locally through photos or videos.

This presentation will discuss the developments made at Ifremer in 3D reconstruction from collections of images or videos acquired by cameras embedded on underwater robots. The first part of the talk will present the open-source software Matisse, developed to produce offline 3D reconstructions and specifically designed to handle underwater images. The second part will be dedicated to recent work on visual SLAM and real-time 3D reconstruction, with an overview of the first results obtained.

Survey of the surf zone with light means: an overview of the work of the SEAL team

Loica AVANTHEY & Laurent BEAUDOIN, EPITA, SEAL team

The presentation will give an overview of the issues addressed by the SEAL team in the study of the shallow seabed (0-100 m) and will introduce the robots, sensors and methodologies developed or under development to address them.

Presentation of the water-sea-ocean axis of the LIRMM (Univ. Montpellier / CNRS)

Vincent CREUZE, CNRS LIRMM, University of Montpellier

In 2021, the LIRMM (University of Montpellier / CNRS) set up a transverse axis bringing together its Computer Science, Robotics and Microelectronics departments around applications related to water, seas and oceans. This presentation will give an overview of the work currently under way at the LIRMM on subjects as varied as deep-learning analysis of underwater images, fish tagging and tracking of marine species, and underwater robotics. (Photo credit: LIRMM)

Innovative Engineering based on Visualisation and Geometric Observations

Edin Omerdic (BEng., MEng., PhD), Centre for Robotics and Intelligent Systems, Department of Electronic and Computer Engineering, University of Limerick, Limerick, Co. Limerick, Ireland

The first part of the lecture will provide a comprehensive overview of the Centre for Robotics and Intelligent Systems, University of Limerick, including its history, funding, people and current projects.

The second part of the lecture will present three case studies: (1) OceanRINGS+, (2) Mixed Reality User Interaction Interface, (3) Innovative Teaching Methods.

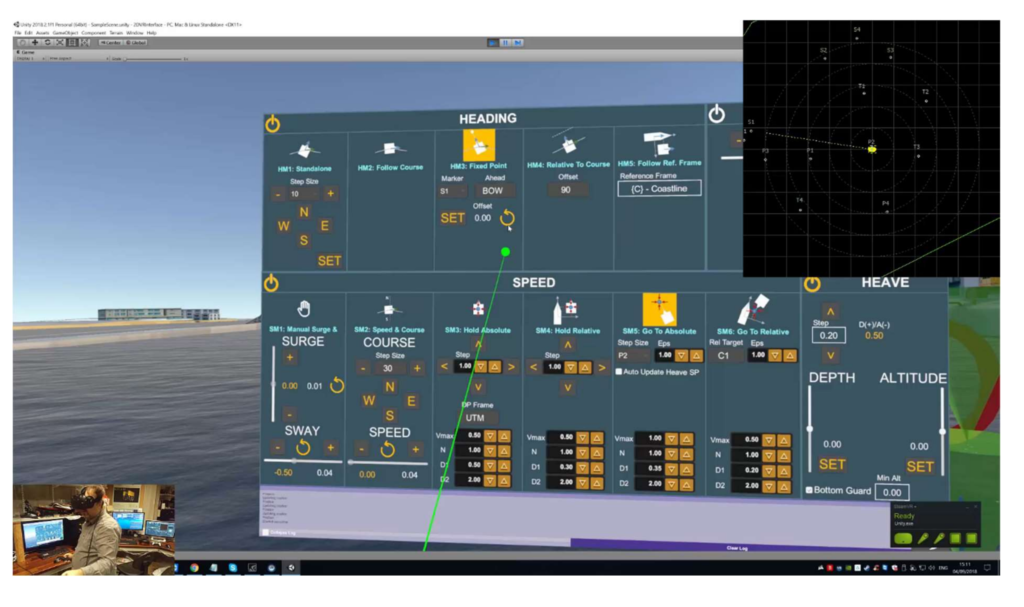

Case Study 1: OceanRINGS+

Built to make complex subsea tasks simple, OceanRINGS+ combines state-of-the-art navigation equipment with advanced control algorithms and emerging VR technologies to provide a smart, intuitive and easy-to-use user interface, enabling average pilots to achieve exceptional results. This case study highlights the main features of the system, presents selected results of test trials, and discusses implementation issues and the potential benefits of the technology.

Case Study 2: Mixed Reality User Interaction Interface

The new Mixed Reality User Interaction Interface for smart Cyber-Physical Systems (CPS) belongs to a class of "mixed reality" systems, which include both classical user interface elements and a set of novel "natural" multi-modal user interfaces based on the exploration and integration of state-of-the-art emerging technologies, including VR headsets, 6 DoF input devices, hand gesture recognition devices and touch screens. The user interface involves 3D interaction, so that tasks are performed through spatial tracking and direct interaction in a virtual 3D spatial context. This multi-modal interface is an extension of the OceanRINGS+ 3D visualization interface, proposed and validated through a series of sea trials. A user wearing a spatial tracking device and a VR headset is placed in an immersive virtual environment that mimics the real world; the user can see their hands in the virtual world and interact with the objects around them. This case study describes the main features of the interface, presents the evolution of the system over time, and discusses future applications.

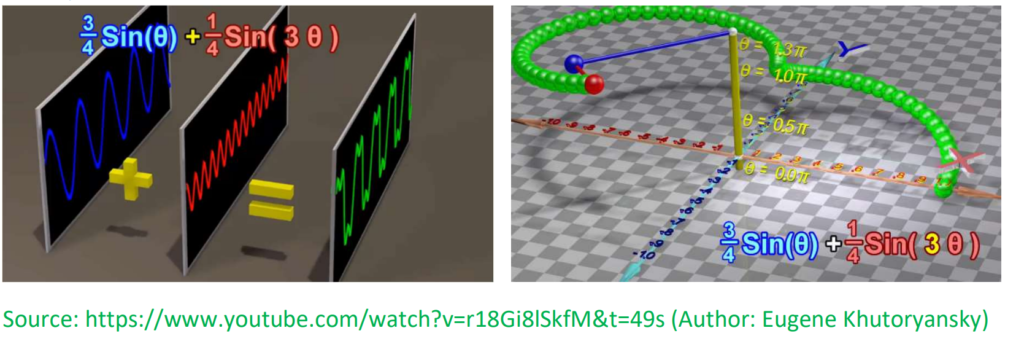

Case Study 3: Innovative Teaching Methods

Ex 1: Least-Squares Data Fitting

Ex 2: Fourier Series

Ex 3: Laplace Transform

Ex 4: Ship Course Keeping: Fuzzy Autopilot vs Neural Network Autopilot

Ex 5: Two Tanks